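Experiment 2: Getting Familiar with Common HDFS Operations

1. Objectives

(1) Understand the role of HDFS in the Hadoop architecture;

(2) Become proficient with the Shell commands commonly used to operate HDFS;

(3) Become familiar with the Java API commonly used to operate HDFS.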

2. Platform

(1) Operating system: Linux (Ubuntu-22.04.2-desktop-amd64);

(2) Hadoop version: 3.1.3;

(3) JDK version: 1.8;

(4) Java IDE: Eclipse.

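3. Procedure and Results

(I) Implement the following functions in a program, and complete the same tasks with the Shell commands provided by Hadoop:

(1) Upload an arbitrary text file to HDFS. If the specified file already exists in HDFS, the user chooses whether to append to the end of the existing file or to overwrite it;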

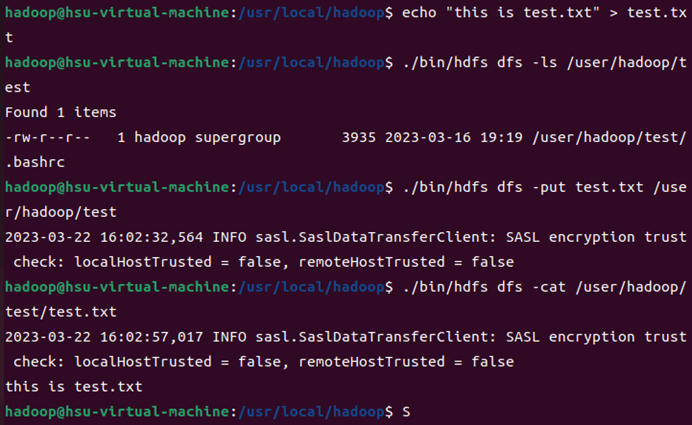

Shell:

Upload:

If the file already exists, append:

If the file already exists, overwrite:

Java:

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.io.FileInputStream;
import java.io.IOException;

public class HDFSApi {
    /** Returns true if the given path exists in HDFS. */
    public static boolean test(Configuration conf, String path) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        return fs.exists(new Path(path));
    }

    /** Copies a local file to HDFS, overwriting the destination if it exists. */
    public static void copyFromLocalFile(Configuration conf, String localFilePath,
                                         String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path localPath = new Path(localFilePath);
        Path remotePath = new Path(remoteFilePath);
        // First flag: keep the local source; second flag: overwrite the destination.
        fs.copyFromLocalFile(false, true, localPath, remotePath);
        fs.close();
    }

    /** Appends the content of a local file to the end of an HDFS file. */
    public static void appendToFile(Configuration conf, String localFilePath,
                                    String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        FileInputStream in = new FileInputStream(localFilePath);
        FSDataOutputStream out = fs.append(remotePath);
        byte[] data = new byte[1024];
        int read;
        while ((read = in.read(data)) > 0) {
            out.write(data, 0, read);
        }
        out.close();
        in.close();
        fs.close();
    }

    public static void main(String[] args) {
        Configuration conf = new Configuration();
        // fs.defaultFS replaces the deprecated key fs.default.name.
        conf.set("fs.defaultFS", "hdfs://localhost:9000");
        String localFilePath = "/usr/local/hadoop/1.txt"; // local path
        String remoteFilePath = "1.txt";                  // HDFS path
        String choice = "append";                         // "append" or "overwrite"

        try {
            boolean fileExists = HDFSApi.test(conf, remoteFilePath);
            if (fileExists) {
                System.out.println(remoteFilePath + " already exists.");
            } else {
                System.out.println(remoteFilePath + " does not exist.");
            }

            if (!fileExists) {
                // The file does not exist yet: plain upload.
                HDFSApi.copyFromLocalFile(conf, localFilePath, remoteFilePath);
                System.out.println(localFilePath + " uploaded to " + remoteFilePath);
            } else if (choice.equals("overwrite")) {
                HDFSApi.copyFromLocalFile(conf, localFilePath, remoteFilePath);
                System.out.println(localFilePath + " overwrote " + remoteFilePath);
            } else if (choice.equals("append")) {
                HDFSApi.appendToFile(conf, localFilePath, remoteFilePath);
                System.out.println(localFilePath + " appended to " + remoteFilePath);
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

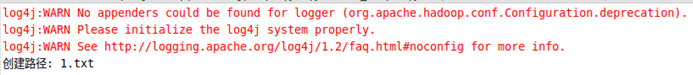

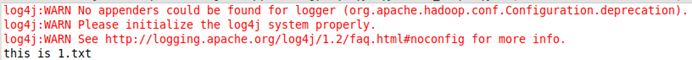

1. When file1.txt does not exist:

2. When file1.txt exists:

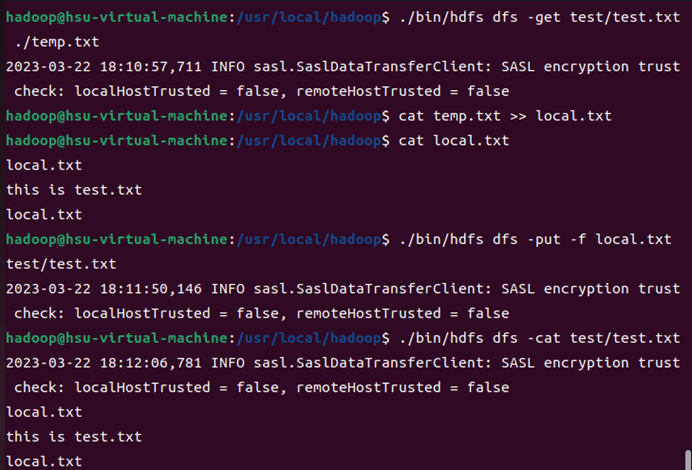

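(2) Download a specified file from HDFS. If a local file with the same name as the file being downloaded already exists, rename the downloaded file automatically;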

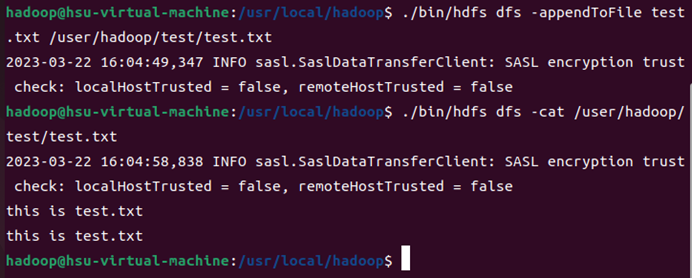

Shell:

Java:

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;

import java.io.*;

public class HDFSApi2 {
    /** Downloads an HDFS file; if the local name is taken, appends _0, _1, ... */
    public static void copyToLocal(Configuration conf, String remoteFilePath,
                                   String localFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        File f = new File(localFilePath);
        if (f.exists()) {
            System.out.println(localFilePath + " already exists.");
            int i = 0;
            while (true) {
                f = new File(localFilePath + "_" + i);
                if (!f.exists()) {
                    localFilePath = localFilePath + "_" + i;
                    break;
                }
                i++; // try the next suffix; without this the loop never ends
            }
            System.out.println("Renaming the download to: " + localFilePath);
        }
        Path localPath = new Path(localFilePath);
        fs.copyToLocalFile(remotePath, localPath);
        fs.close();
    }

    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://localhost:9000");
        String localFilePath = "/usr/local/hadoop/2.txt"; // local path
        String remoteFilePath = "1.txt";                  // HDFS path

        try {
            HDFSApi2.copyToLocal(conf, remoteFilePath, localFilePath);
            System.out.println("Download complete");
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

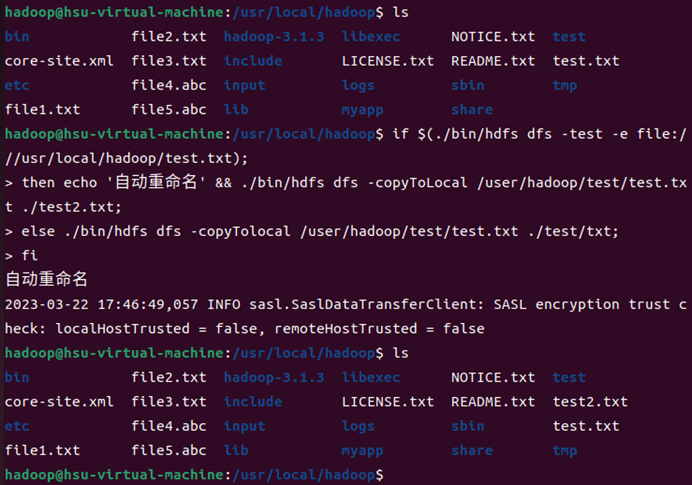

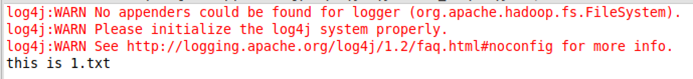

1. When the file already exists:

2. When the file does not exist:

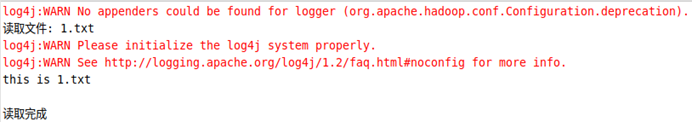
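The renaming rule in task (2) is easy to get wrong, because the suffix counter has to advance after every name that is already taken. The following is a minimal sketch of that rule on its own, using only the JDK so it can be tried without a cluster. The class name `UniqueLocalName` and the default path in `main` are hypothetical and chosen for illustration; the `_N` suffix scheme follows the program in this section.

```java
import java.io.File;

/** Picks a local file name that does not collide with an existing file. */
class UniqueLocalName {
    /**
     * Returns path unchanged if nothing exists there; otherwise returns the
     * first of path_0, path_1, ... that is not taken.
     */
    static String resolve(String path) {
        if (!new File(path).exists()) {
            return path;
        }
        int i = 0;
        while (new File(path + "_" + i).exists()) {
            i++; // advance the counter, otherwise the loop would never terminate
        }
        return path + "_" + i;
    }

    public static void main(String[] args) {
        // Hypothetical default path, for illustration only.
        String target = args.length > 0 ? args[0] : "/tmp/2.txt";
        System.out.println(resolve(target));
    }
}
```

The returned name could then be passed as the local destination of the download.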

(3) Print the content of a specified HDFS file to the terminal;

Shell:

Java:

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;

import java.io.*;

public class HDFSApi3 {
    /** Reads an HDFS file line by line and prints it to standard output. */
    public static void cat(Configuration conf, String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        FSDataInputStream in = fs.open(remotePath);
        BufferedReader d = new BufferedReader(new InputStreamReader(in));
        String line;
        while ((line = d.readLine()) != null) {
            System.out.println(line);
        }
        d.close();
        in.close();
        fs.close();
    }

    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://localhost:9000");
        String remoteFilePath = "1.txt"; // HDFS path

        try {
            System.out.println("Reading file: " + remoteFilePath);
            HDFSApi3.cat(conf, remoteFilePath);
            System.out.println("\nRead complete");
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

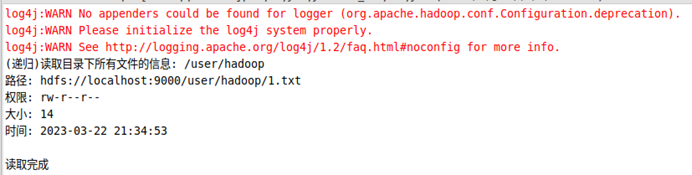

(4) Display the read/write permissions, size, creation time, path, and other information of a specified file in HDFS;

Shell:

Java:

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;

import java.io.*;
import java.text.SimpleDateFormat;

public class HDFSApi4 {
    /** Prints path, permissions, size, and modification time of the given path. */
    public static void ls(Configuration conf, String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        FileStatus[] fileStatuses = fs.listStatus(remotePath);
        for (FileStatus s : fileStatuses) {
            System.out.println("Path: " + s.getPath().toString());
            System.out.println("Permissions: " + s.getPermission().toString());
            System.out.println("Size: " + s.getLen());
            // getModificationTime() returns milliseconds since the epoch.
            long timeStamp = s.getModificationTime();
            SimpleDateFormat format = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
            String date = format.format(timeStamp);
            System.out.println("Modification time: " + date);
        }
        fs.close();
    }

    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://localhost:9000");
        String remoteFilePath = "1.txt"; // HDFS path

        try {
            System.out.println("Reading file information: " + remoteFilePath);
            HDFSApi4.ls(conf, remoteFilePath);
            System.out.println("\nRead complete");
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```
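The time reported by the program for task (4) comes from `FileStatus.getModificationTime()`, which is a count of milliseconds since the epoch (UTC); `FileStatus` offers modification and access times but no separate creation time. The sketch below shows one way such a value could be turned into text with `java.time`, whose formatter is immutable and safe to share between threads. The class name `TimestampFormat` is hypothetical, and the pattern is the one used in the program.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;

/** Formats an epoch-millisecond timestamp, such as a file modification time. */
class TimestampFormat {
    // Same pattern as the SimpleDateFormat used in the HDFS program.
    private static final DateTimeFormatter FORMAT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    /** Renders epochMillis as local date and time in the given zone. */
    static String format(long epochMillis, ZoneId zone) {
        return FORMAT.format(Instant.ofEpochMilli(epochMillis).atZone(zone));
    }

    public static void main(String[] args) {
        // Uses the current time as a stand-in for a real modification time.
        System.out.println(format(System.currentTimeMillis(), ZoneId.systemDefault()));
    }
}
```

Passing the zone explicitly makes the output reproducible; `format(0L, ZoneId.of("UTC"))` yields `1970-01-01 00:00:00`.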

(5) Given a directory in HDFS, output the read/write permissions, size, creation time, path, and other information of every file in it. If an entry is itself a directory, recursively output the information of all files under that directory;

Shell:

Java:

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;

import java.io.*;
import java.text.SimpleDateFormat;

public class HDFSApi5 {
    /** Recursively prints information about every file under the directory. */
    public static void lsDir(Configuration conf, String remoteDir) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path dirPath = new Path(remoteDir);
        // The second argument enables recursion into subdirectories.
        RemoteIterator<LocatedFileStatus> remoteIterator = fs.listFiles(dirPath, true);
        while (remoteIterator.hasNext()) {
            FileStatus s = remoteIterator.next();
            System.out.println("Path: " + s.getPath().toString());
            System.out.println("Permissions: " + s.getPermission().toString());
            System.out.println("Size: " + s.getLen());
            long timeStamp = s.getModificationTime();
            SimpleDateFormat format = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
            String date = format.format(timeStamp);
            System.out.println("Modification time: " + date);
            System.out.println();
        }
        fs.close();
    }

    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://localhost:9000");
        String remoteDir = "/user/hadoop"; // HDFS directory

        try {
            System.out.println("Recursively reading information for all files under: " + remoteDir);
            HDFSApi5.lsDir(conf, remoteDir);
            System.out.println("Read complete");
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

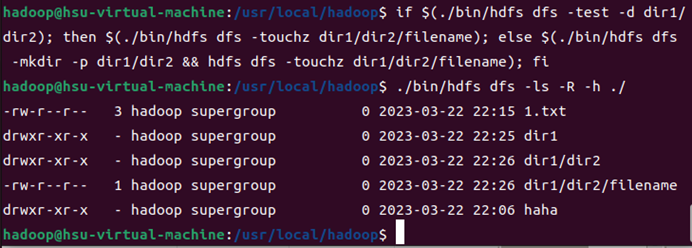

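(6) Given the path of a file in HDFS, create and delete that file. If the directory containing the file does not exist, create the directory automatically;

Shell:

Create: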

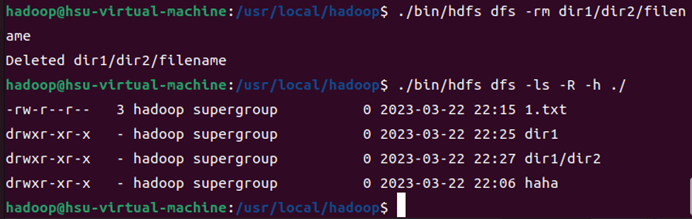

Delete:

Java:

1. Automatically create the directory and file:

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;

import java.io.*;

public class HDFSApi6 {
    /** Returns true if the given path exists in HDFS. */
    public static boolean test(Configuration conf, String path) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        return fs.exists(new Path(path));
    }

    /** Creates a directory, including any missing parent directories. */
    public static boolean mkdir(Configuration conf, String remoteDir) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path dirPath = new Path(remoteDir);
        boolean result = fs.mkdirs(dirPath);
        fs.close();
        return result;
    }

    /** Creates an empty file. */
    public static void touchz(Configuration conf, String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        FSDataOutputStream outputStream = fs.create(remotePath);
        outputStream.close();
        fs.close();
    }

    /** Deletes a file (non-recursive). */
    public static boolean rm(Configuration conf, String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        boolean result = fs.delete(remotePath, false);
        fs.close();
        return result;
    }

    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://localhost:9000");
        String remoteFilePath = "1.txt";   // HDFS file
        String remoteDir = "/user/hadoop"; // directory containing the file

        try {
            if (HDFSApi6.test(conf, remoteFilePath)) {
                // The file exists: delete it.
                HDFSApi6.rm(conf, remoteFilePath);
                System.out.println("Deleted path: " + remoteFilePath);
            } else {
                // The file does not exist: create the directory if needed, then the file.
                if (!HDFSApi6.test(conf, remoteDir)) {
                    HDFSApi6.mkdir(conf, remoteDir);
                    System.out.println("Created directory: " + remoteDir);
                }
                HDFSApi6.touchz(conf, remoteFilePath);
                System.out.println("Created path: " + remoteFilePath);
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

2. Delete the directory:

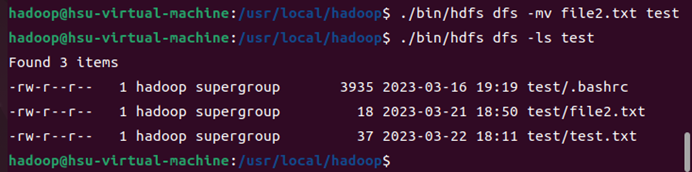

(7) Given the path of a directory in HDFS, create and delete that directory. When creating it, automatically create any missing parent directories; when deleting it, let the user decide whether the directory should still be deleted if it is not empty;

Shell:

1. Delete the file:

2. Create the directory:

Java:

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;

import java.io.*;

public class HDFSApi7 {
    /** Returns true if the given path exists in HDFS. */
    public static boolean test(Configuration conf, String path) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        return fs.exists(new Path(path));
    }

    /** Returns true if the directory has no entries (neither files nor subdirectories). */
    public static boolean isDirEmpty(Configuration conf, String remoteDir) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path dirPath = new Path(remoteDir);
        // listStatus also reports subdirectories, which a recursive listFiles would skip.
        return fs.listStatus(dirPath).length == 0;
    }

    /** Creates a directory, including any missing parent directories. */
    public static boolean mkdir(Configuration conf, String remoteDir) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path dirPath = new Path(remoteDir);
        boolean result = fs.mkdirs(dirPath);
        fs.close();
        return result;
    }

    /** Deletes a directory together with all of its content. */
    public static boolean rmDir(Configuration conf, String remoteDir) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path dirPath = new Path(remoteDir);
        boolean result = fs.delete(dirPath, true); // true: recursive delete
        fs.close();
        return result;
    }

    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://localhost:9000");
        String remoteDir = "/user/hadoop/dir1/dir2"; // HDFS directory
        boolean forceDelete = false;                  // delete even if not empty?

        try {
            if (!HDFSApi7.test(conf, remoteDir)) {
                // The directory does not exist: create it.
                HDFSApi7.mkdir(conf, remoteDir);
                System.out.println("Created directory: " + remoteDir);
            } else {
                if (HDFSApi7.isDirEmpty(conf, remoteDir) || forceDelete) {
                    HDFSApi7.rmDir(conf, remoteDir);
                    System.out.println("Deleted directory: " + remoteDir);
                } else {
                    System.out.println("Directory is not empty, not deleted: " + remoteDir);
                }
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

1. Delete the directory:

2. Create the directory:
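The deletion rule in task (7), delete an empty directory unconditionally and a non-empty one only when the user forces it, can be tried on the local file system without a cluster. The sketch below is such a local analogue built on `java.nio.file`; it is not HDFS code, and the class name `GuardedDirDelete` and its method names are hypothetical. Note that a directory containing only an empty subdirectory counts as non-empty here.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.FileVisitResult;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.SimpleFileVisitor;
import java.nio.file.attribute.BasicFileAttributes;

/** Local-file-system analogue of "delete a directory, optionally by force". */
class GuardedDirDelete {
    /** True if the directory has no entries at all (files or subdirectories). */
    static boolean isEmpty(Path dir) throws IOException {
        try (DirectoryStream<Path> entries = Files.newDirectoryStream(dir)) {
            return !entries.iterator().hasNext();
        }
    }

    /** Returns true if dir was deleted, false if it was non-empty and force was off. */
    static boolean delete(Path dir, boolean force) throws IOException {
        if (isEmpty(dir)) {
            Files.delete(dir);
            return true;
        }
        if (!force) {
            return false; // refuse to remove content the user did not ask to lose
        }
        // Forced: remove files first, then each directory after its content.
        Files.walkFileTree(dir, new SimpleFileVisitor<Path>() {
            @Override
            public FileVisitResult visitFile(Path file, BasicFileAttributes attrs)
                    throws IOException {
                Files.delete(file);
                return FileVisitResult.CONTINUE;
            }

            @Override
            public FileVisitResult postVisitDirectory(Path d, IOException e)
                    throws IOException {
                if (e != null) {
                    throw e;
                }
                Files.delete(d);
                return FileVisitResult.CONTINUE;
            }
        });
        return true;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("dir1");
        Files.createFile(dir.resolve("a.txt"));
        System.out.println("without force: " + delete(dir, false));
        System.out.println("with force: " + delete(dir, true));
    }
}
```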

(8) Append content to a specified file in HDFS; the user chooses whether the content is added at the beginning or at the end of the existing file;

Shell:

Append to the end:

Adding content at the beginning of the original file: HDFS has no command that corresponds to this operation, so it cannot be done with a single command.

Java:

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;

import java.io.*;
import java.nio.charset.StandardCharsets;

public class HDFSApi8 {
    /** Returns true if the given path exists in HDFS. */
    public static boolean test(Configuration conf, String path) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        return fs.exists(new Path(path));
    }

    /** Appends a string to the end of an HDFS file. */
    public static void appendContentToFile(Configuration conf, String content,
                                           String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        FSDataOutputStream out = fs.append(remotePath);
        out.write(content.getBytes(StandardCharsets.UTF_8));
        out.close();
        fs.close();
    }

    /** Appends the content of a local file to the end of an HDFS file. */
    public static void appendToFile(Configuration conf, String localFilePath,
                                    String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        FileInputStream in = new FileInputStream(localFilePath);
        FSDataOutputStream out = fs.append(remotePath);
        byte[] data = new byte[1024];
        int read;
        while ((read = in.read(data)) > 0) {
            out.write(data, 0, read);
        }
        out.close();
        in.close();
        fs.close();
    }

    /** Moves an HDFS file to the local file system. */
    public static void moveToLocalFile(Configuration conf, String remoteFilePath,
                                       String localFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        Path localPath = new Path(localFilePath);
        fs.moveToLocalFile(remotePath, localPath);
        fs.close();
    }

    /** Creates an empty file. */
    public static void touchz(Configuration conf, String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        FSDataOutputStream outputStream = fs.create(remotePath);
        outputStream.close();
        fs.close();
    }

    public static void main(String[] args) {
        Configuration conf = new Configuration();
        // Needed for append on a single-DataNode setup (see Problem 2 in the summary).
        conf.set("dfs.client.block.write.replace-datanode-on-failure.policy", "NEVER");
        conf.setBoolean("dfs.client.block.write.replace-datanode-on-failure.enabled", true);
        conf.set("fs.defaultFS", "hdfs://localhost:9000");
        String remoteFilePath = "1.txt";              // HDFS file
        String content = "newly appended content\n";
        String choice = "after";                      // "after" = end, "before" = beginning

        try {
            if (!HDFSApi8.test(conf, remoteFilePath)) {
                System.out.println("File does not exist: " + remoteFilePath);
            } else {
                if (choice.equals("after")) {
                    HDFSApi8.appendContentToFile(conf, content, remoteFilePath);
                    System.out.println("Appended content to the end of " + remoteFilePath);
                } else if (choice.equals("before")) {
                    // No direct API: move the file to local storage, recreate it,
                    // write the new content, then append the original content.
                    String localTmpPath = "/user/xusheng/tmp.txt";
                    HDFSApi8.moveToLocalFile(conf, remoteFilePath, localTmpPath);
                    HDFSApi8.touchz(conf, remoteFilePath);
                    HDFSApi8.appendContentToFile(conf, content, remoteFilePath);
                    HDFSApi8.appendToFile(conf, localTmpPath, remoteFilePath);
                    System.out.println("Added content to the beginning of " + remoteFilePath);
                }
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```
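Because append-only storage has no "insert at the front" operation, the program for task (8) prepends in three steps: set the original content aside, recreate the file with the new content, then append the original content. The sketch below replays those steps on the local file system with `java.nio.file`, as a way to check the ordering without a cluster. It is a local analogue, not HDFS code; the class name `PrependViaTemp` is hypothetical, and it reads the whole file into memory, so it only suits small files.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.nio.file.StandardOpenOption;

/** Local analogue of "prepend by moving the original aside and re-appending it". */
class PrependViaTemp {
    static void prepend(Path target, String content) throws IOException {
        Path tmp = Files.createTempFile("prepend", ".tmp");
        try {
            // Step 1: set the original content aside.
            Files.copy(target, tmp, StandardCopyOption.REPLACE_EXISTING);
            // Step 2: recreate the target so that it starts with the new content.
            Files.write(target, content.getBytes(StandardCharsets.UTF_8));
            // Step 3: append the original content after it.
            Files.write(target, Files.readAllBytes(tmp), StandardOpenOption.APPEND);
        } finally {
            Files.deleteIfExists(tmp);
        }
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("demo", ".txt");
        Files.write(file, "original line\n".getBytes(StandardCharsets.UTF_8));
        prepend(file, "new first line\n");
        System.out.print(new String(Files.readAllBytes(file), StandardCharsets.UTF_8));
        Files.delete(file);
    }
}
```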

(9) Delete a specified file in HDFS;

Shell:

Java:

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;

import java.io.*;

public class HDFSApi9 {
    /** Deletes a file (non-recursive); returns false if nothing was deleted. */
    public static boolean rm(Configuration conf, String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        boolean result = fs.delete(remotePath, false);
        fs.close();
        return result;
    }

    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://localhost:9000");
        String remoteFilePath = "1.txt"; // HDFS file

        try {
            if (HDFSApi9.rm(conf, remoteFilePath)) {
                System.out.println("Deleted file: " + remoteFilePath);
            } else {
                System.out.println("Operation failed (file does not exist or could not be deleted)");
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

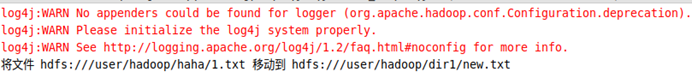

(10) Within HDFS, move a file from a source path to a destination path.

Shell:

Java:

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;

import java.io.*;

public class HDFSApi10 {
    /** Moves (renames) a file inside HDFS. */
    public static boolean mv(Configuration conf, String remoteFilePath,
                             String remoteToFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path srcPath = new Path(remoteFilePath);
        Path dstPath = new Path(remoteToFilePath);
        boolean result = fs.rename(srcPath, dstPath);
        fs.close();
        return result;
    }

    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://localhost:9000");
        String remoteFilePath = "hdfs:///user/hadoop/haha/1.txt";     // source HDFS path
        String remoteToFilePath = "hdfs:///user/hadoop/dir1/new.txt"; // destination HDFS path

        try {
            if (HDFSApi10.mv(conf, remoteFilePath, remoteToFilePath)) {
                System.out.println("Moved file " + remoteFilePath + " to " + remoteToFilePath);
            } else {
                System.out.println("Operation failed (source file does not exist or move failed)");
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

(II) Implement a class "MyFSDataInputStream" that extends "org.apache.hadoop.fs.FSDataInputStream", with the following requirement: implement a method "readLine()" that reads a specified HDFS file line by line. It returns null when the end of the file has been reached, and otherwise returns one line of the file's text.

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.io.*;

public class MyFSDataInputStream extends FSDataInputStream {
    public MyFSDataInputStream(InputStream in) {
        super(in);
    }

    /**
     * Reads one line, one character at a time, stopping at "\n".
     * Returns the line without its terminator, or null at end of file.
     */
    public static String readLine(BufferedReader br) throws IOException {
        int c = br.read();
        if (c == -1) {
            return null; // nothing left to read
        }
        // A StringBuilder has no fixed capacity, so long lines are handled too.
        StringBuilder line = new StringBuilder();
        while (c != -1 && c != '\n') {
            line.append((char) c);
            c = br.read();
        }
        return line.toString();
    }

    /** Prints the content of an HDFS file using readLine(). */
    public static void cat(Configuration conf, String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        FSDataInputStream in = fs.open(remotePath);
        BufferedReader br = new BufferedReader(new InputStreamReader(in));
        String line;
        while ((line = MyFSDataInputStream.readLine(br)) != null) {
            System.out.println(line);
        }
        br.close();
        in.close();
        fs.close();
    }

    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://localhost:9000");
        String remoteFilePath = "/user/hadoop/1.txt"; // HDFS path
        try {
            MyFSDataInputStream.cat(conf, remoteFilePath);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```
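The contract asked for in part (II), return one line per call and null once the input is exhausted, does not depend on HDFS and can be checked against any `InputStream`. The sketch below is one possible stand-alone version that works on raw bytes and decodes each line as UTF-8. The class name `LineReaderSketch` and the UTF-8 assumption are illustrative choices, not part of the assignment; splitting on the byte `\n` is safe in UTF-8 because that byte never occurs inside a multi-byte character.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;

/** Reads lines from any InputStream; a stand-alone sketch of the readLine() contract. */
class LineReaderSketch {
    /** Returns the next line without its terminator, or null at end of stream. */
    static String readLine(InputStream in) throws IOException {
        int b = in.read();
        if (b == -1) {
            return null; // end of stream and nothing read: no more lines
        }
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        while (b != -1 && b != '\n') {
            buf.write(b);
            b = in.read();
        }
        String line = new String(buf.toByteArray(), StandardCharsets.UTF_8);
        // Drop the '\r' left over from a Windows-style "\r\n" terminator.
        if (line.endsWith("\r")) {
            line = line.substring(0, line.length() - 1);
        }
        return line;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = "first\n\nlast line without newline".getBytes(StandardCharsets.UTF_8);
        InputStream in = new ByteArrayInputStream(data);
        String line;
        while ((line = readLine(in)) != null) {
            System.out.println("[" + line + "]");
        }
    }
}
```

Reading one byte per call is slow on an unbuffered stream, so in practice the input would usually be wrapped in a `BufferedInputStream` first.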

(III) Consult the Java documentation or other references, and use "java.net.URL" together with "org.apache.hadoop.fs.FsUrlStreamHandlerFactory" to write a program that prints the text of a specified HDFS file to the terminal.

```java
import org.apache.hadoop.fs.*;
import org.apache.hadoop.io.IOUtils;

import java.io.*;
import java.net.URL;

public class HDFSApi11 {
    static {
        // Teach java.net.URL how to open hdfs:// URLs.
        URL.setURLStreamHandlerFactory(new FsUrlStreamHandlerFactory());
    }

    public static void main(String[] args) throws Exception {
        String remoteFilePath = "hdfs://localhost:9000/user/hadoop/1.txt"; // HDFS file
        InputStream in = null;
        try {
            // Open the file through the URL and copy it to standard output.
            in = new URL(remoteFilePath).openStream();
            IOUtils.copyBytes(in, System.out, 4096, false);
        } finally {
            IOUtils.closeStream(in);
        }
    }
}
```
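`java.net.URL` finds the code that opens a URL through a handler registered for the URL's scheme. The JVM ships with handlers for schemes such as `file:`, and registering Hadoop's factory adds `hdfs:`. Since `URL.setURLStreamHandlerFactory` can be called at most once per JVM, the registration is normally placed in a static initializer. The sketch below exercises the same read path, `openStream()` followed by a buffered copy, with a built-in `file:` URL so it runs without Hadoop. The class name `UrlCat` and the temporary file are hypothetical.

```java
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.URL;
import java.nio.charset.StandardCharsets;

/** Copies whatever a URL points at to an output stream. */
class UrlCat {
    static void copy(URL url, OutputStream out) throws IOException {
        try (InputStream in = url.openStream()) {
            byte[] buf = new byte[4096];
            int n;
            while ((n = in.read(buf)) != -1) {
                out.write(buf, 0, n);
            }
        }
        out.flush();
    }

    public static void main(String[] args) throws IOException {
        // A temporary local file stands in for the HDFS file.
        File f = File.createTempFile("urlcat", ".txt");
        f.deleteOnExit();
        try (FileOutputStream w = new FileOutputStream(f)) {
            w.write("hello from a file: URL\n".getBytes(StandardCharsets.UTF_8));
        }
        copy(f.toURI().toURL(), System.out);
    }
}
```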

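4. Summary

(1) Completion rate: 100%

(2) Problems encountered and solutions:

Problem 1: Unfamiliarity with the Java language.

Solution: Worked through the Java tutorial on Runoob (https://www.runoob.com/java/java-tutorial.html) to get a general understanding of Java syntax.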

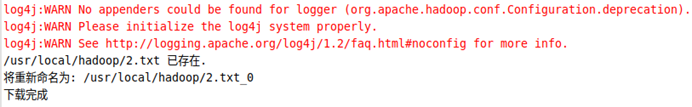

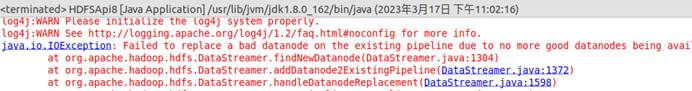

Problem 2: The program fails with the following error:

java.io.IOException: Failed to replace a bad datanode on the existing pipeline due to no more good datanodes being available to try. (Nodes: current=[DatanodeInfoWithStorage[127.0.0.1:9866,DS-d6371aa4-2270-413c-92b7-5ca51a9b6a28,DISK]], original=[DatanodeInfoWithStorage[127.0.0.1:9866,DS-d6371aa4-2270-413c-92b7-5ca51a9b6a28,DISK]]). The current failed datanode replacement policy is DEFAULT, and a client may configure this via 'dfs.client.block.write.replace-datanode-on-failure.policy' in its configuration.

Solution: Add the following two lines to the Java program, right after the Configuration object is created:

```java
// Configuration conf = new Configuration();
conf.set("dfs.client.block.write.replace-datanode-on-failure.policy", "NEVER");
conf.setBoolean("dfs.client.block.write.replace-datanode-on-failure.enabled", true);
```

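Problem 3: Running the Java program in Eclipse fails with the error

Class org.apache.hadoop.hdfs.DistributedFileSystem not found

Solution: After consulting references, importing hadoop-hdfs-client-3.1.1.jar into the project made the program run successfully.

Cause: The class org.apache.hadoop.hdfs.DistributedFileSystem was moved from hadoop-hdfs-2.7.1.jar, where it originally lived, into hadoop-hdfs-client-3.1.1.jar and later versions.

Problem 4: Syntax error on token "*", ; expected after this token

Solution: This is a Java syntax error; adding a semicolon (;) at the reported position fixes it.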